- File deduplication software Linux Patch
- File deduplication software Linux professional
- File deduplication software Linux series
- File deduplication software Linux free


# File deduplication software Linux Patch

Because this method eliminates duplicates at the block level, identical blocks are removed even when they belong to different files, so the elimination ratio is good and a substantial amount of storage space is saved.

By using this method, we can eliminate the redundant allocation of data blocks; as a result, we save space and use the storage more efficiently. This is how enterprises and large organizations, whose data is growing exponentially, can save space.
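To make the space saving concrete, the sketch below counts how many fixed-size blocks in a byte string are unique, hashing each block with SHA-1 as the method described here does. It is a minimal illustration, not part of the Ext4 modification itself: the 4 KiB block size and the `dedup_stats` name are assumptions chosen for the example, and the reported ratio is simply total blocks divided by unique blocks.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size in bytes (a common ext4 block size)


def dedup_stats(data: bytes, block_size: int = BLOCK_SIZE) -> tuple[int, int, float]:
    """Split data into fixed-size blocks and count how many are unique.

    Returns (total_blocks, unique_blocks, ratio), where ratio = total / unique.
    Hypothetical helper for illustration only.
    """
    seen = set()  # SHA-1 digests of blocks already encountered
    total = 0
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        seen.add(hashlib.sha1(block).digest())
        total += 1
    unique = len(seen)
    ratio = total / unique if unique else 1.0  # empty input: no saving
    return total, unique, ratio
```

For example, four blocks of which three are identical (`b"A" * 4096 * 3 + b"B" * 4096`) give `(4, 2, 2.0)`: only two blocks need to be stored, halving the space used.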

# File deduplication software Linux free

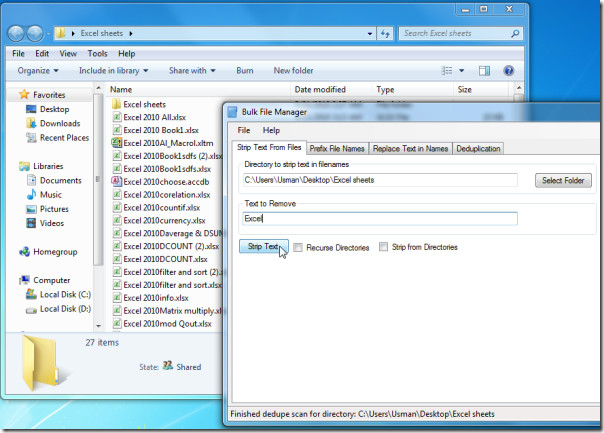

In every operating system, storage space is managed by the file system; in other words, the file system is what stores data on secondary storage. We therefore modify the file system so that it eliminates redundant blocks of data before they are written to secondary storage, an approach called inline data deduplication. Ext4, the newest member of the ext family of Linux file systems, already has many new features, so we modify Ext4 to add one more: data deduplication.

In our inline deduplication method, we create a table that stores each hash key together with the number of the block that holds the data for that key. The hash key is generated with the SHA-1 algorithm: whenever new data arrives, it is passed to SHA-1 before any blocks are allocated for it, and the resulting key is compared with the keys already stored in the table.

- If the key is already present, only the counter for that key is incremented. This counter tracks how many pointers refer to the block on the physical device.
- If the key is not present, the key is stored and control passes to the superblock, which allocates free blocks from the list it maintains and returns the allocated block numbers to the table. The block numbers are stored against the key, and its counter is incremented.
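The write path described above can be modelled in a few lines of Python. This is a hypothetical user-space sketch, not the authors' Ext4 code: the `DedupTable` class, its `write_block` method, and the plain list standing in for the superblock's free-block list are assumptions made for illustration, and the "disk" is just a dictionary.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class DedupTable:
    """Toy model of an inline-deduplication table (hypothetical names).

    Maps a SHA-1 key to [block_number, reference_count]. A plain list of
    free block numbers stands in for the superblock's free-block allocator.
    """
    free_blocks: list[int]
    table: dict[bytes, list[int]] = field(default_factory=dict)
    disk: dict[int, bytes] = field(default_factory=dict)  # simulated device

    def write_block(self, data: bytes) -> int:
        """Hash the incoming block before allocating; return its block number."""
        key = hashlib.sha1(data).digest()
        entry = self.table.get(key)
        if entry is not None:
            # Duplicate: only bump the count of pointers to the existing block.
            entry[1] += 1
            return entry[0]
        # New data: take a free block from the allocator, write it, record the key.
        if not self.free_blocks:
            raise OSError("no free blocks")
        block_no = self.free_blocks.pop(0)
        self.disk[block_no] = data
        self.table[key] = [block_no, 1]
        return block_no
```

Writing `b"hello"`, `b"world"` and `b"hello"` again to a table whose free list starts at block 100 returns blocks 100, 101 and 100, and the entry for `b"hello"` ends up with a counter of 2. Because different data can in principle share a SHA-1 hash, a more careful design might also compare the stored bytes before treating a hash match as a duplicate.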

# File deduplication software Linux professional

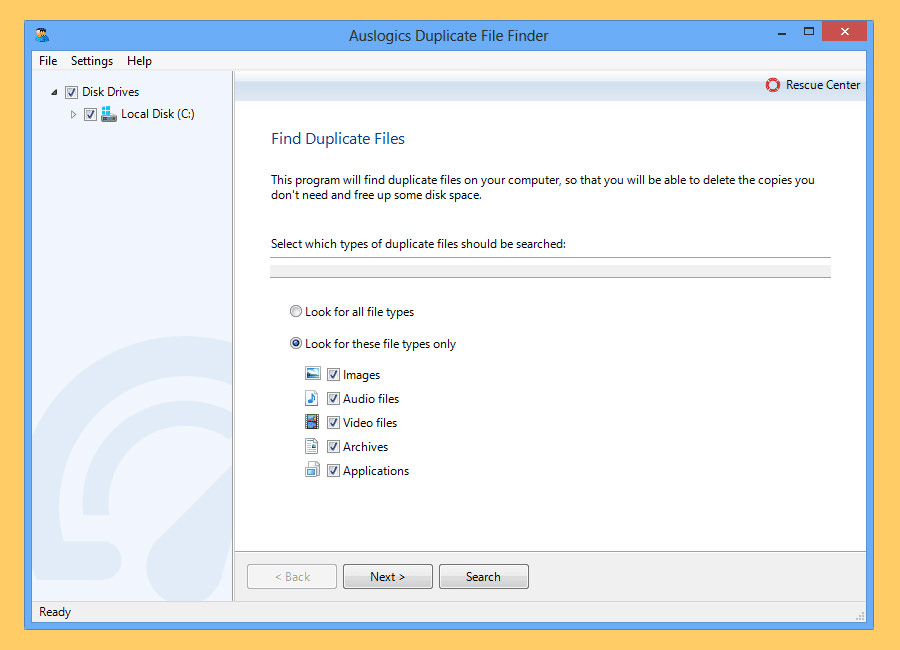

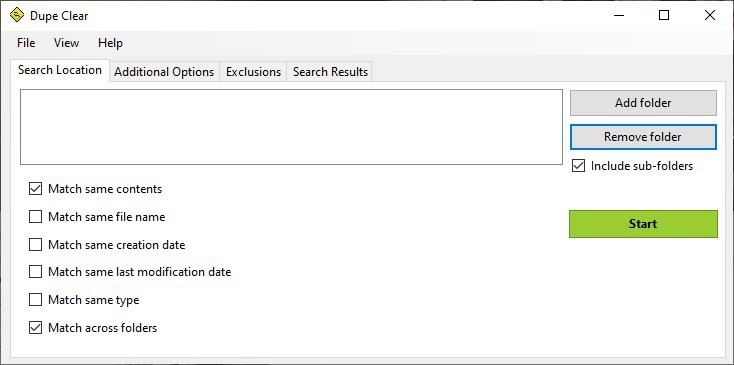

Removing duplicate records allows you, in direct marketing, to avoid double solicitations and the redundant maintenance of customer addresses and other records. This saves significantly on your expenses, and it also improves your company's outward image. By taking advertising blacklists into account, you can also avoid trouble with recipients who do not wish to receive advertising. Here is a brief overview of the features of DataQualityTools:

- Fast, largely automated and therefore easy-to-use comparison of address databases to find duplicate addresses.
- The postal address (error-tolerant matching), telephone number, email address, address or customer number, and tax number can all serve as matching criteria.
- Comparison against blacklists, and enhancement of an address database with data from a second address database based on the comparison results.
- User-defined universal matching that can be used for any kind of data.
- Numerous options for further processing of the matching results: besides deleting records directly in the source file, you can flag them in the source file or process them further using a stored procedure or a duplicate, results or archive file.
- A project history showing what was done with a given project and when.
- Various functions for preparing address and other databases, such as case correction, term replacement, and systematic deletion of individual records.

Not all of the functions described above are available in the Professional Edition (USD 398).

# File deduplication software Linux series

DataQualityTools is a collection of tools that helps businesses improve the quality of their databases. Its central components are a series of functions for finding duplicate records and, above all, a function for error-tolerant duplicate search based on postal addresses.
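Error-tolerant duplicate search of this kind can be approximated with a generic string-similarity measure. The sketch below is not DataQualityTools' matching algorithm, which is not described here; it swaps in the ratio from Python's standard `difflib.SequenceMatcher` on normalized addresses, and the `normalize` and `find_duplicates` names and the 0.9 threshold are hypothetical choices.

```python
import re
from difflib import SequenceMatcher
from itertools import combinations


def normalize(address: str) -> str:
    """Lower-case, replace punctuation with spaces and collapse whitespace."""
    address = re.sub(r"[^\w\s]", " ", address.lower())
    return " ".join(address.split())


def find_duplicates(addresses: list[str], threshold: float = 0.9) -> list[tuple[int, int, float]]:
    """Return (i, j, score) for address pairs at least `threshold` similar.

    Hypothetical helper: compares every pair of normalized addresses.
    """
    normed = [normalize(a) for a in addresses]
    pairs = []
    for i, j in combinations(range(len(normed)), 2):
        score = SequenceMatcher(None, normed[i], normed[j]).ratio()
        if score >= threshold:
            pairs.append((i, j, score))
    return pairs
```

With `["12 Main Street, Springfield", "12 Main Stret, Springfield", "7 Oak Avenue, Shelbyville"]`, only the pair `(0, 1)` is reported, because the misspelled "Stret" still scores well above the threshold. Comparing every pair is quadratic, so larger databases would likely need a way to narrow down candidate pairs first.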
